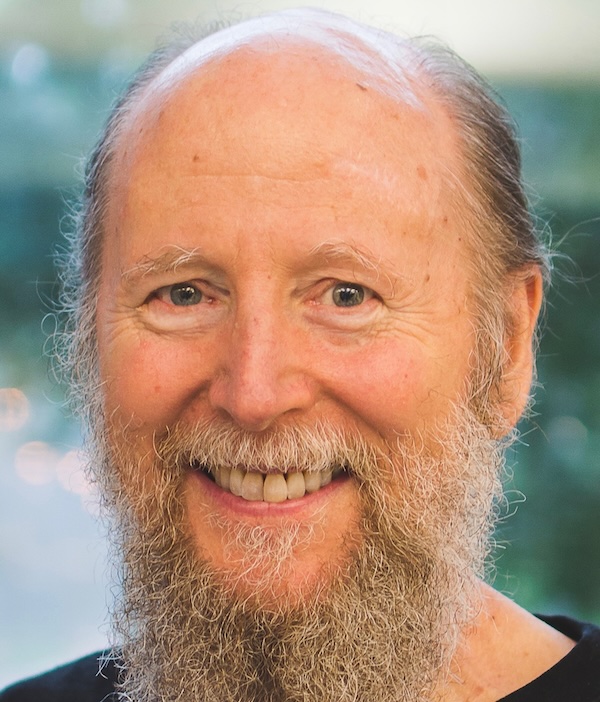

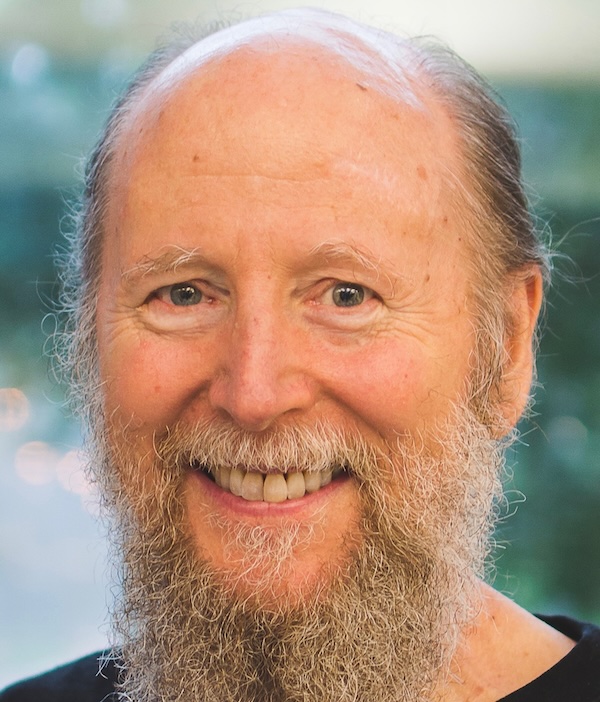

About the Speaker

Rich Sutton, FRS, FRSC, is an artificial intelligence researcher specializing in reinforcement learning. He works at Keen Technologies, the University of Alberta, the Alberta Machine Intelligence Institute, and the Openmind Research Institute. Earlier he studied at Stanford University and the University of Massachusetts, and worked at GTE Labs, AT&T Labs, and DeepMind. His scientific publications have been cited about 150,000 times. He is also a libertarian, a chess player, and a cancer survivor.

Abstract

Artificial neural networks and deep learning, the leading edge of modern AI, are modeled after a distributed system (the biological brain) but de-emphasize its distributed aspects. For example, modern deep learning emphasizes the synchronized convergence of whole networks and gradient descent on a single global error surface. The perspective of the individual neuron-like unit is, sadly, almost entirely absent from deep learning's theory and algorithms. In this talk I investigate whether taking the decentralized perspective of the single unit can ease some of deep learning's recurring problems, such as loss of plasticity, catastrophic forgetting, and slow online learning. I highlight recent work led by Dohare on loss of plasticity, published in Nature, and work led by Elsayed on streaming reinforcement-learning algorithms.